Llama 3.1 chatbot to query my blog pages (or any other archive of web sites)

I wrote a Python/Jupyter Notebook that can be used to find information in a list of web pages. You can download and use it on your local computer. It is free and it works,

This post is exclusively on AI stuff. It describes the performance of a Python/Jupyter Notebook that can be used to extract information from a list of web pages. You can download this notebook and use it on your local computer. It uses an open-source LLM, it is free, it works, and I provide all the information that you need.

You will need to know Python but you do not need to know anything on AI to run this program.

Last year I tried to write a program to query my blog posts. If it could access my blog pages, then the same program could also be used to access other knowledge bases, e.g. an engineering textbook. As I posted in February 2024, I paused this work. I had two reasons:

a. The OpenAI API interface was not only clumsy but buggy too; and

b. If I continued that work and wrote a textbook using OpenAI LLM, then everybody wanting to access my textbook would need an OpenAI license and pay OpenAI every time they checked something in the textbook.

I needed a free open-source alternative but none of the free models at that time was good enough. Then Meta released Llama. It is not perfect but it has the potential to do what I want and it is free. In this post, I demonstrate the performance of Llama 3.1 on my blog posts. If you want, you can try it on other web site archives. The program is offered as a Jupyter/Python notebook and is plug-compatible with any web-based knowledge base that can be read by langchain WebBaseLoader.

Input Box

The program runs as a Jupyter notebook and receives inputs using the python input function. The following is a screen dump of this process:

Once you start, you can keep entering different queries and/or commands in a loop. All inputs and outputs are automatically saved on your disk.

The technical details are at the end. First, I will show what you can do with it. If that is of interest, then you can continue with the technical details.

Example Run

Query: What are typical electrolyser efficiencies?

(The program first expands this into a paragraph because it is difficult to locate the right document using a single sentence. The expansion is done by asking Llama itself to answer the question based on its own internal knowledge. You may remove this step by using ghew command !XF)

Expanded Query: Typical electrolyser efficiencies vary depending on the type of electrolysis technology, stack design, and operating conditions. In general, alkaline electrolyzers, which are widely used for industrial-scale hydrogen production, typically operate at an efficiency of around 70-80%. Proton exchange membrane (PEM) electrolyzers, commonly used in fuel cell applications, can achieve efficiencies ranging from 60% to 90%, while solid oxide electrolyzers (SOEs), often employed in power-to-gas and chemical synthesis, can reach efficiencies of up to 95%. Other types of electrolyzers, such as anion exchange membrane (AEM) and liquid junction electrolyzers, may have slightly lower efficiencies. Overall, the efficiency of an electrolyzer is influenced by factors like current density, operating temperature, and gas purity, making it important to consider these variables when selecting or designing an electrolysis system.

Short Listed blog pages:

https://halimgur.substack.com/p/let-us-talk-about-elon-musk Section #3

https://halimgur.substack.com/p/sadness-of-a-ghost-town Section #2

https://halimgur.substack.com/p/lying-oracles-and-the-anyone-and Section #6

(These are not the right pages and the program answer is “I do not know”. I decide to do a text search first.)

Query : !FElectrolyser

(I ask the program to find all pages that contains the word ‘Electrolyser’)

Short list

https://halimgur.substack.com/p/retirement-a-journey-of-continued Section #1

https://halimgur.substack.com/p/the-importance-of-elite-consensus Section #3

https://halimgur.substack.com/p/retirement-a-journey-of-continued Section #1

Query : !A0S0

(I ask the program to answer Question #0 using the first short listed page. It rovides the following answer.)

Answer : According to the provided text, academic papers use electrolyzer efficiencies of 65% or higher in their economic scenario generations.

This is not really the answer I want. I ask again with more elaboration.

Query: According to the provided text, academic papers use electrolyzer efficiencies of 65% or higher in their economic scenario generations. What is the efficiency for an industrial electrolyser?

(Then the program comes up with the following answer)

Answer: According to the provided text, the efficiency calculated for ACES Delta's Hydrogen Electrolyzers is 55.6%. This is compared to academic papers that use electrolyzer efficiencies of 65% or higher in their economic scenario generations.

So, the answer is: The efficiency for an industrial electrolyser mentioned in the academic papers is 65% or higher.

I do not like this answer either. It is muddled. I guess, it is the adjective “typical” that is making it difficult for the LLM. So I remove it from the query.

Query : Answer”What is the efficiency of an electrolyser?” using the first short-listed page.

To this question I get a concise (and correct) answer.

Answer: According to the provided text, the efficiency (eta) of the electrolyzer is 55.6%.

I ask on a different topic:

Query : What is the cost of sending 1 kg to the orbit?

It first expands this question (see above as in the first query) and searches for the 3 pages with embedding vectors closest to the embedding vector for the expanded text. This time, it is successful in finding the correct pages:

https://halimgur.substack.com/p/monster-wave-soon-to-hit-your-shore Section #4

https://halimgur.substack.com/p/monster-wave-soon-to-hit-your-shore Section #2

https://halimgur.substack.com/p/monster-wave-soon-to-hit-your-shore Section #5

But it fails to generate the correct answer. Referring to the first document, it answers:

I do not know. The provided text does not mention the cost of sending 1 kg to the orbit. It only mentions the projected cost of sending a payload size up to 100 tonnes at $600k/tonne, but it does not provide information about smaller payloads like 1 kg.

This is interesting in terms of demonstrating the limits to the reasoning ability of this small LLM. I ask the same question again in different units:

Use the first short-listed page to answer this question: “What is the cost of sending 1 tonne to the orbit?“

Then the correct answer is found:

I can answer that! According to Figure 2, if we can send payload sizes up to 100 tonnes at a cost of $600k/tonne, then the cost of sending 1 tonne would be:$600,000 / 100 = $6,000 per tonne

Reflections

In a RAG (Retrieval Augmented Generation) application, it is important that the program

(a) is able to identify the most relevant document section(s);

(b) interprets those documents correctly to find the answer; and

(c ) answers “I do not know”, if the documents do not have the information needed.

Llama 3.1 is hopeless in the first task. In fact, I found that a much simpler embedder "nomic-embed-text1" is better in finding the most similar documents. Llama 3.1 is not alone in this weakness. None of the existing LLMs are able to do this job. OpenAI ChatGPT, for example, was better but even it made too many mistakes. In fact, this is why we do not see a proliferation of customer service chatbots even though two years ago this seemed like the most immediate commercial application of GPTs. The literature suggests supporting the LLM embedding search by using other ways of knowledge representation, e.g. graph nets. Unless you are working on a very large knowledge base, using text search as I did in my program is probably sufficient.

Llama 3.1 is a bit better in the second task. Because it is a relatively small model, its reasoning ability is not as developed as that of ChatGPT or Claude. Therefore, you may need a few iterations to get an answer. This is not a big problem for me because I think it can be improved by better design of the text database.

Llama 3.1 was very good on the third task. It never hallucinated an answer and said “I do not know” when the document did not include the information. For my purposes, this is the most important bit because I can compensate for deficiencies in the first two tasks but there is not much I can do when the model hallucinates.

Technical

If the above was of some interest and if you think you may want to try it on your own computer, continue reading.

This section tells you what you need to do to run the program blogq on your computer. You do not need to pay OpenAI or any other provider because the LLM will be running on your local computer.

Computer

My computer is an iMac with an Apple M1 chip and 16GB Memory. The operating system is Sonoma 14.7.

Python

My Python version is Python 3.11.1. I always use a virtual environment for even the smallest programs. This habit uses a bit of disk space because there is a copy of Python in every program folder but the benefits are huge. In VS Code, you can create a virtual environment by pressing [COMMAND][SHIFT]{P} and selecting Python:Create Environment… from the menu.

VS Code

My preferred code editor and the program development environment is VS Code. Microsoft was generous to make this free and it is now the default editor for almost all software developers.

Jupyter Notebooks

My program is configured as a Jupyter notebook. VS Code fully supports Jupyter notebooks.

Ollama and langchain

There are open source Python libraries that I use to access Llama 3.1. You can install them in your virtual environment by using pip install. At the top of the notebook you will see a cell with pip commands all commented out. You have to uncomment and execute those pip commands.

User Interface

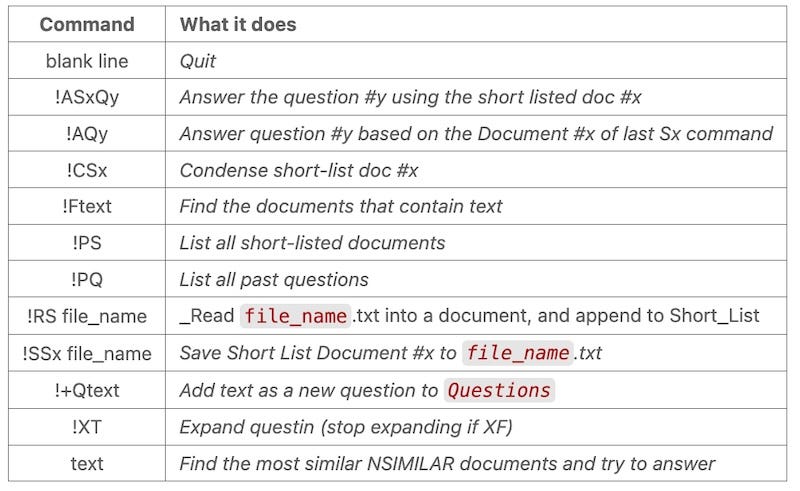

The execution is by entering different queries and/or commands in a loop in the input box as shown in the image at the top of the page. The following figure shows the list of commands:

I know that this is a very clumsy user interface. It is easy to polish it up to a regular chatbot but at this stage this is good enough.

The best way to see the program response is by monitoring the log file (see the next section for what these are).

Do this as follows:

Start the notebook

blogqin one VS Code windowStart a new VS Code window next to it

Drag and drop the log file for the current session into this new VS Code window

VS Code will automatically refresh the display in the log file window as you keep asking in the

blogqwindow.

Log files

The interaction with the program is automatically logged. The log files are in the folder log. They are named as log1.md, log2.md, log3.md, etc. with the name automatically incremented at the beginning of the query. Delete the files manually to restart the count.

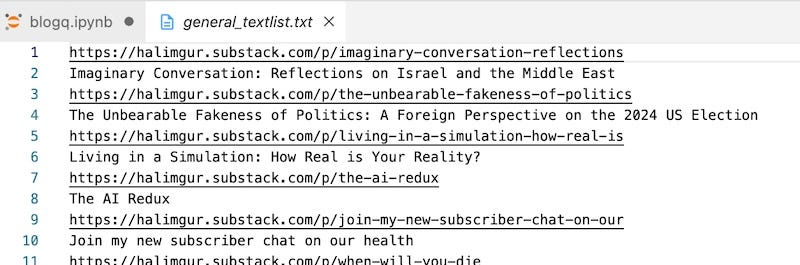

List of texts

The file general_textlist.txt in the data folder includes the URL addresses and the titles of all my blog pages that are accessed by this program.

If you want to try the program on another web site, you need to replace the contents of this file with pages of your choice.

github

Download the notebook and the associated files from github.com/Gurgenci/blogq . Download it as a zip file and extract it to a local folder on your computer.

Questions?

If you have problems in getting this notebook running on your computer, please ask by posting your question as a comment below.

A model offered by the AI company Nomic. This embedder is available through Ollama.

Thank you for the question. It gives me the opportunity to go into more technical detail, which I avoided to keep the post accessible.

My blog pages are already clustered by separator "-+-+-+-+". This is because my aim in this exercise is to identify the best way of organising the source text to get 100% reliable LLM performance.

My 57 web page addresses are split into 411 chunks of text by using that separator string. The similarity search is performed on that 411. I started with nomic because it is very quick (1 minutes compared to 25 minutes by Llama embedder). This makes it easy when you are debugging your script. When I switched to Llama after the script got stabilised, I noticed that Llama was worse than nomic in identifying the right chunk for my queries.

I use "cosine similarity" for distance measurement between two embedding vectors. I tried other metrics but decided on cosine similarity (which is basically the dot product of the two vectors).

Please ask again if the above is not clear enough.

Can you elaborate on the task you thought nomic-embed-text outperformed the others? Is there a technical distinction between finding the correct document / sections of the document, or clusters of content within one long document?

Example: you have a long, meandering multi-day diary by a man marooned on an island. His writing could conceivably be clustered conceptually into 'obtaining food' 'obtaining water' 'creating shelter' 'improving visibility to planes'. But it is just one long document (which you could divide arbitrarily into chunks).

If you wanted LLama to summarise this and similar diary entries by first identifying all these relevant clusters, and then summarising the diary content for each cluster, are you saying the Nomic product would outperform LLama at (the clustering part) of this task, or is there a technical distinction between this task and finding the relevant document section(s)?