Please subscribe; please share. There is no downside because your email will not be used for other purposes. You will receive no advertisements.

Copy and share the link, post it on another platform. It is not that I get money with more subscribers, but it makes me happy when more people read it.

-+-+-+-+

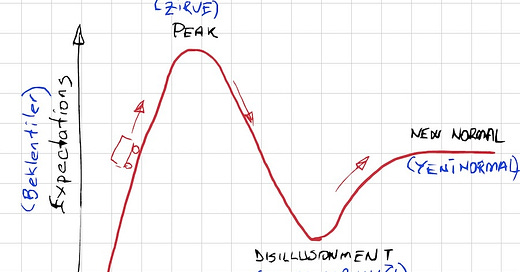

Most innovations go through five phases, known as the Gartner Hype Cycle:

1. We start in a state of euphoria, excitement and high expectations.

2. Expectations peak, yet there are no results.

3. Disillusionment sets in as inflated expectations are not met.

4. Realism follows as we learn the potential and limitations of the new technology.

5. Finally, the stage I call New Normal. The new technology starts producing useful results. The results are less than the peak expectations but still valuable and make life better for us. All the hoopla is soon forgotten and we start living with this new normal.

With Large Language Models (LLMs), the expectation peak was reached sometime towards the end of last year. We are now moving down the slope. Even the panic-mongers have slowed down. For example, I hardly see Harari on YouTube these days spruiking how LLMs (he calls them AI) will be the end of the world.

Rosy announcements from the developers are still coming, but they do not cause the same kind of splash (or should I say whiplash) as they did last year. For example, OpenAI last week announced the impending launch of a new model called Strawberry, which is said to have superior reasoning capability. The internal name for this model used to be Q*. You may remember from last year that people attributed the sacking of OpenAI CEO Sam Altman to differences of opinion on Q*: it was said to be a powerful general-purpose AI, and the rumor was that some Board members were scared there was no protection against it taking over the world. Now OpenAI announces that the model is being released, and public opinion responds with a mighty shrug.

I think part of the disappointment is the lack of progress towards so-called “killer apps”. There are useful niche applications but we have yet to see any of the mass market apps that were promised at the peak of the AI hype.

I had a balanced optimism when ChatGPT first came out. I quote from my 24 March 2023 post:

Computer programs … may learn how to speak and write like engineers, but not how to calculate … LLMs cannot be engineers using only the transformer technique but they can learn to run the necessary programs for an engineering task.

When this happens, it is not going to be Artificial General Intelligence but another tool to help human engineers create better products.

More or less, I am still of the same opinion.

Some readers may remember my own endeavours to use LLMs to produce an interactive textbook of mechanical design. I used the OpenAI API to practise with and learn about embeddings, retrieval, and a chatbot answering queries on my blog posts. The instability and bugginess of the OpenAI API were among the reasons my progress has been slow. ChatGPT is a brilliant product and a credit to OpenAI, but the OpenAI API seems to be managed by a different company.

Llama

Yann LeCun (VP and Chief AI Scientist at Meta) never believed that LLMs would lead to Artificial General Intelligence (AGI). He must have convinced Zuckerberg that there was no point in Meta trying to make money from LLMs. Instead, Meta develops powerful LLMs and releases them as open source. I suppose they do so to prevent the likes of OpenAI and Anthropic from getting rich on their closed-source LLMs and from becoming future competitors to Facebook in other areas.

Llama 3.1 is their latest release. Using LangChain and Ollama, I am able to run Meta-Llama-3-8B, the 8-billion-parameter model from the preceding Llama 3 release (Ollama tag `llama3`), on my Mac M1 computer. The model has a context length of 8192 tokens and an embedding vector size of 4096. At the moment, I am trying to write a program that uses it to query my blog posts. I did this using the OpenAI API, but one needs to pay OpenAI every time a call is made. This is not expensive when developing software but could be prohibitive for users if I manage to develop it to product level.

Llama Performance

You may remember my post in which I asked Claude and ChatGPT about cantilever stresses.

Now I do the same with Llama. Remember, this is running on my local computer. I run it in a Jupyter notebook.

```python
# !pip3 install langchain_community
# Also needs a local Ollama server with the model pulled: ollama pull llama3

# Import Ollama
from langchain_community.llms import Ollama

# Create a model instance
llm = Ollama(model="llama3")

# The prompt
query_text = (
    "To solve this problem, I want you to use the Chain of Thought strategy. "
    "First, break down the problem into a series of steps, explaining your "
    "thought process at each step. Then provide the final answer.\n\n"
    "`A cantilever beam has a circular cross-section. Its diameter is 10 mm. "
    "What is its cross-sectional area?`"
)

s = llm.invoke(query_text)  # Ask llama3
```

It took 25.2 s on my Mac M1 to produce the following answer:

I used the `md` (markdown printing) function to print it. I then asked my next question:

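```python
from IPython.display import Markdown, display

def md(text):
    """Render a string as Markdown in Jupyter (an assumed stand-in for the md helper)."""
    display(Markdown(text))

follow_up = "The length of this cantilever beam is 1m. A load of 100N is acting on its free end. What is the maximum stress caused in the beam?"

s = llm.invoke(follow_up)
md(s)
```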

It took 37.4 s to produce the following response:

It obviously forgot the context of the first question and assumed a rectangular beam. This is my fault. ChatGPT and Claude maintain the context through their chat interfaces; here, I have to do it myself. So, I try again:

It is the wrong answer, but you can see how it is trying. It is not too bad for a local model running without commercial servers. Remember, Anthropic's Claude also failed to answer this question correctly.
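For comparison, the textbook answers to the two questions can be computed directly. This is a short sketch assuming simple Euler-Bernoulli beam theory, with the maximum bending stress σ = Mc/I at the fixed end and shear and self-weight ignored; the formulas are standard ones, not taken from any of the model outputs:

```python
import math

# Inputs from the two questions (converted to mm and N)
d = 10.0      # diameter of the circular cross-section, mm
L = 1000.0    # beam length, mm (1 m)
F = 100.0     # load at the free end, N

# Question 1: cross-sectional area of a solid circle
A = math.pi * d**2 / 4            # mm^2

# Question 2: maximum bending stress, which occurs at the fixed end
M = F * L                         # maximum bending moment, N.mm
I = math.pi * d**4 / 64           # second moment of area, mm^4
c = d / 2                         # distance from neutral axis to outer fibre, mm
sigma_max = M * c / I             # N/mm^2, i.e. MPa

print(f"A = {A:.2f} mm^2")                  # A = 78.54 mm^2
print(f"sigma_max = {sigma_max:.0f} MPa")   # sigma_max = 1019 MPa
```

The same stress follows from the section-modulus form σ = 32FL/(πd³).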

I will continue working with Llama.
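Each `llm.invoke` call is independent, so the missing context has to be supplied by resending the earlier questions and answers with every new question. A minimal sketch of one way to do this by hand; the `Conversation` class and its plain "Question/Answer" transcript format are illustrative assumptions, not a LangChain feature:

```python
from typing import Callable, List, Tuple


class Conversation:
    """Keep the question/answer history and resend it with every new question.

    `ask` is any function that takes a prompt string and returns the model's
    reply as a string, for example llm.invoke from the Ollama instance above.
    """

    def __init__(self, ask: Callable[[str], str]):
        self.ask = ask
        self.history: List[Tuple[str, str]] = []

    def build_prompt(self, question: str) -> str:
        # Replay every earlier turn so the model sees the full context.
        turns = [f"Question: {q}\nAnswer: {a}" for q, a in self.history]
        turns.append(f"Question: {question}\nAnswer:")
        return "\n\n".join(turns)

    def send(self, question: str) -> str:
        reply = self.ask(self.build_prompt(question))
        self.history.append((question, reply))
        return reply
```

With the notebook's Ollama instance, this would be used as `chat = Conversation(llm.invoke)`, then `chat.send(query_text)` followed by `chat.send(follow_up)`, so the second prompt carries the circular cross-section from the first. The prompt grows with every turn, so a long conversation will eventually run into the model's 8192-token context length.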

In summary, I do not think we will have AGI soon. If someone figures out how to make LLMs separate categorical from probabilistic knowledge (and I do not think this is going to take too long), we will have AI tools in specific domains helping human practitioners. Some of them will be tools running locally on your laptop or smartphone. As I said last year, and as I keep hearing from Microsoft (they must be following my blog), textbooks are all you need.

I had discussed this with ChatGPT when it was first introduced. I thought I should have the same conversation with the new and more powerful GPT-4o. I include it in a footnote [1].

PS: While I was finalising this post, Alibaba introduced Qwen2-VL, a new vision-language AI model. People say that it beats GPT-4o on some benchmarks.

Short Takes

Manifest privilege

I learned from the 11 February 2024 issue of Adrian Tout's newsletter that the US economy (measured by Real GDP) represents less than 18% of the world's total economic output, but the US stock market accounts for about 70% of global market capitalization. This implies a belief among global share-market investors that a small portion of the global economy (18%) will generate more than three times the profits generated by companies in the rest of the world [2].

The distortion could be due to the inflated tech stocks. The chart shows how the top 7 US tech stocks are on a path separate from the rest:

Another reason why US shares are more expensive than the rest could be the perception of risk. People buying US shares may be paying a premium for safety, because they believe that it is hard for another country to take actions that hurt the US economy and US share market, but not vice versa.

EGS Revival

Two US companies, Sage Geosystems and Fervo Energy, are part of the EGS revival in the States. Sage Geosystems will build a 150-MWe geothermal plant to power Meta servers. Fervo has signed power purchase agreements (PPAs) with Southern California Edison to provide up to 320 MW of electricity by 2028. If these two projects are successful, they may signal a revival of interest in EGS geothermal.

EGS stands for Enhanced Geothermal Systems. You can also refer to it as deep geothermal. At depths of 3000 m and deeper, the rocks are hot almost everywhere, and if you can bring the heat to the surface at an affordable price, you have solved the energy problem. If you want to learn more, you can read this article I wrote for The Conversation in 2013. Everything there is still true, except that in Australia at that time we failed to find a way of bringing all that heat to the surface at an affordable price. Sage Geosystems and Fervo Energy may be on the road to solving that problem. Therefore, their experience deserves to be followed closely.

Copper String cost blow-out

Australian governments started discussing the Copper String project twenty years ago. The idea was to connect the Mt Isa-Cloncurry mining district to the eastern Australian power grid (the NEM network).

Here are some parameters:

Length = From Townsville to Mt Isa, the transmission line length is 840 km. An additional 200 km will be built to connect new generators to Copper String.

Transmission Capacity = The maximum transmission capacity is not mentioned in any of the documents, nor is the current rating of the line. Typical values for high-voltage lines vary from 1000 to 5000 amperes. The current-carrying capacity of a high-voltage line is limited by the line temperature, and North Queensland is hot. Therefore, I expect a value of less than 2000 A. This means an approximate transmission capacity of 1000 MWe.

Cost = The original estimate was $4b and last week we learned that it is going to cost $5.2b. The construction has not started yet. Further cost blow-outs will not be unexpected.
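The step from a current limit of about 2000 A to roughly 1000 MWe uses the three-phase power formula P = √3 · V · I · cos φ. The line voltage is not stated in the parameters above, so the sketch below assumes 330 kV and a power factor of 1; both are illustrative assumptions, not project data:

```python
import math

def three_phase_power_mw(line_voltage_kv, current_a, power_factor=1.0):
    """Power carried by a balanced three-phase AC line, in MW."""
    return math.sqrt(3) * line_voltage_kv * 1e3 * current_a * power_factor / 1e6

# Assumed values: 330 kV line voltage (not given in the text), 2000 A limit
print(f"{three_phase_power_mw(330, 2000):.0f} MW")  # 1143 MW
```

Under these assumptions the result, a little over 1100 MW, is in line with the estimate of about 1000 MWe; a lower power factor or a lower current limit would bring it down further.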

Why is all construction so expensive in Australia?

The estimated Copper String cost is $5200/MWe per kilometer. Compare this with the unit price I reported in my 8 July 2024 post for the Chinese 12-GW UHVDC line from Xinjiang to Anhui, which is US$150/MW per kilometer. I know I am not comparing apples with apples. One would expect the unit cost to be higher for long-distance AC lines; also, a higher carrying capacity would bring the unit cost down. Even with both caveats, the cost of building transmission lines in Australia seems to be more than an order of magnitude higher than in China: about 35 times on the raw figures, and still more than 20 times after converting Australian to US dollars.
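A quick check of the gap between the two unit costs quoted above, taking both figures as given. The exchange rate of 1 AUD = 0.67 USD is an assumption added for illustration:

```python
import math

# Unit costs as quoted in the text
copperstring_aud_per_mw_km = 5200.0   # A$ per MW per km (Copper String)
china_usd_per_mw_km = 150.0           # US$ per MW per km (Xinjiang-Anhui UHVDC)

# Assumption (not from the text): 1 AUD = 0.67 USD
aud_to_usd = 0.67

raw_ratio = copperstring_aud_per_mw_km / china_usd_per_mw_km
usd_ratio = copperstring_aud_per_mw_km * aud_to_usd / china_usd_per_mw_km

# An order of magnitude is a factor of 10, so log10 gives the number of orders
print(f"raw ratio: {raw_ratio:.1f}x ({math.log10(raw_ratio):.2f} orders of magnitude)")
print(f"in US$:    {usd_ratio:.1f}x ({math.log10(usd_ratio):.2f} orders of magnitude)")
```

This prints about 34.7x (1.54 orders of magnitude) and 23.2x (1.37 orders of magnitude).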

PS (6 Sep 2024): When I posted this section on LinkedIn, a good friend and veteran of the Australian energy industry commented that the CEO of MinRes (ASX:MIN), which has an internal construction division in WA, had highlighted that their internal steel fabrication cost was ~$12k/t. This is why they subcontract to facilities in China, which supply the same scope for <$2k/t. This is an amazing indictment of the competitiveness of the Australian steel fabrication sector. In fact, a Substack post from Brian Potter that just came into my inbox suggests to me that this may be an Anglo-Saxon disease: Why Can't the U.S. Build Ships?

Diary

My Rucking Backpack

I posted on the Health Chat how and why I started rucking. Following Taylan's advice, I bought a proper backpack to protect my back. It is not too heavy, only 12.5 kg, but it feels heavier after an hour of walking:

and here it is from the back:

Sunny Park Construction

I started following this minor construction project last November, writing about the hole they dug in our nearby shopping centre. In February, it was still a construction site. I think it is now close to completion. This is how it looked last week:

Not much seems to have changed compared to the original but it took one year to get here.

Campus Construction

The construction along the lakes district is finally finished with the completion of the amphitheatre:

Pascal Hagi

They are listening to Paz on Spotify before going to sleep. I left the room for 15 minutes and when I came back, they were asleep in their cage.

What I read

Still Life by Louise Penny

If not for the Substack blog of Jeremy Anderberg, I would not have known of Louise Penny. This is her first book, published in 2005. The setting for this first book, and I think her subsequent books too, is Three Pines, a fictitious small Anglophone town about two hours from Montreal. In spite of its size, it seems to be a magnet for interesting people. Think of Portsea near Melbourne, which is also an interesting town of about the same size.

The book starts with a committee meeting discussing the upcoming arts competition. The submission from a retired schoolteacher, Jane Neal, creates some excitement. Then Jane Neal is found dead, killed by an arrow. This is an area where hunting with bow and arrow is quite popular and most residents are expert archers. There are also seasonal visitors. I learned a lot about the types of bows and other technicalities of archery.

Every police series must have a good detective character. Louise Penny’s detective is Chief Inspector Gamache, who is a French Canadian but speaks English “like a hereditary member of the House of Lords” we are told. Gamache is a wise and compassionate man. Most protagonists in modern detective novels have failed marriages, they live alone, sometimes have drink problems. Gamache is none of that. He is a devoted husband, he is a good team member, he tries to be a good mentor to his team and his team members love and respect him. He is a formidable but humble policeman. In this first book, his character is interesting but a bit sketchy. I am hoping it will be fleshed out in subsequent books.

Agatha Christie, A Pocketful of Rye

The next book in Agatha Christie's Miss Marple series. A number of murders in an unhappy family seem to follow a nursery rhyme: "Sing a song of sixpence/A pocket full of rye/Four and twenty blackbirds/Baked in a pie." There is of course a logical explanation, and it takes Miss Marple to find it.

AT Index

Based on my basket of goods, Australia is only 56% more expensive than Istanbul this week. Below are the prices in Turkish lira for the items in the basket on 4 August 2024. The conversion rate is 1 AUD = 21.54 TRY.

The following is the plot of the AT index. The height of each bar represents how much more expensive Australia is: if prices were equal, the bar height would be 1.00. Australia this week is 56% more expensive than Turkey.

The code to create the above tables and the plot is in my github repository and can be downloaded if you are interested.
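The index itself is simple to compute. A minimal sketch, not the code in the GitHub repository, assuming the index is the ratio of the two basket totals after converting Australian prices to lira at 1 AUD = 21.54 TRY; the function name and the dictionary layout are hypothetical:

```python
def at_index(aus_prices_aud, ist_prices_try, try_per_aud=21.54):
    """Ratio of the Australian basket cost to the Istanbul basket cost.

    Both arguments map item name -> price. Assumption: the index is the
    ratio of basket totals, both expressed in Turkish lira. A value of
    1.00 means equal prices; 1.56 means Australia is 56% more expensive.
    """
    if set(aus_prices_aud) != set(ist_prices_try):
        raise ValueError("both baskets must contain the same items")
    aus_total_try = sum(aus_prices_aud.values()) * try_per_aud
    ist_total_try = sum(ist_prices_try.values())
    return aus_total_try / ist_total_try
```

A ratio of totals weights each item by its price; averaging the item-by-item price ratios would be a different, equally defensible index and could give a different number.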

[1] I described my project to ChatGPT-4o with the following query:

My interest in LLMs is to use them to produce an interactive textbook. This requires three things: (a) for a query, the LLM will manage to find the appropriate sections in the textbook and answer the query based only on the information in those sections; (b) if the query requires the LLM to follow a set of equations to produce an answer, the LLM will be able to do this. It will ask for additional information if necessary along the way and will produce a quantitative answer; (c) in a tutoring mode, the LLM will ask the student questions from a question bank prepared by the author. The LLM will process the students' answers and will evaluate their accuracy and relevance on a scale of 1 to 10. Do you think ChatGPT is able to do these things? Do you know if other LLMs are able to do these things?

I summarised the main points of its answer:

Let's evaluate the feasibility of each of your requirements:

a) Query-Based Section Retrieval and Answering

- Current Capability: Can be done today with RAG or fine-tuning.

- Challenges: The key challenge is ensuring the LLM doesn’t hallucinate.

b) Following Equations to Produce Quantitative Answers

- Current Capability: OK for simple calculations, but more complex mathematical reasoning is difficult without specialized training.

- Potential Solution: Integrate LLMs with external computation engines. This requires additional code and API integration.

c) Tutoring Mode with Question Evaluation

- Current Capability: LLMs can ask questions and evaluate answers if trained or fine-tuned to understand and respond to the content of a specific textbook.

- Challenges: Accurate grading that aligns with educational standards requires careful fine-tuning and may still show variability.
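The "external computation engine" idea in (b) can be sketched as follows: the LLM is asked to reply with the name of a formula and its inputs in a fixed JSON format, and ordinary Python code does the arithmetic. The JSON format, the formula names and `run_tool_call` are all hypothetical, chosen for illustration:

```python
import json
import math

# Hypothetical registry of formulas the LLM is allowed to call (N and mm units)
FORMULAS = {
    "circle_area": lambda d: math.pi * d**2 / 4,
    "cantilever_max_stress": lambda F, L, d: 32 * F * L / (math.pi * d**3),
}

def run_tool_call(llm_reply: str) -> float:
    """Evaluate a reply such as {"formula": "circle_area", "inputs": {"d": 10}}."""
    call = json.loads(llm_reply)
    formula = FORMULAS[call["formula"]]   # KeyError if the LLM names an unknown formula
    return formula(**call["inputs"])

reply = '{"formula": "cantilever_max_stress", "inputs": {"F": 100, "L": 1000, "d": 10}}'
print(f"{run_tool_call(reply):.0f}")  # 1019 (MPa)
```

Keeping the arithmetic outside the model means a wrong number can only come from a wrong choice of formula or inputs, which is easier to check than a calculation done inside the model.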

Other LLMs and Capabilities

- OpenAI's ChatGPT: Can do these tasks, using embeddings and external tools.

- Google's Bard, Anthropic's Claude, and Meta's LLaMA: Similarly capable but would require integration with specific retrieval and computation tools.

Conclusion

Creating an interactive textbook using LLMs is feasible but will require integrating several technologies and frameworks.

I asked a follow-up question:

Determining the accuracy of an entry by the student and determining whether a result is a hallucination both require an ability to assess the truthfulness of a statement without needing fine-tuning on similar questions and statements. Can an LLM today provide a one-shot judgement on the truthfulness of an assessment on a scale of 1 to 10, where 1 is pure hallucination and 10 is error-free truth?

This is a summary of ChatGPT-4o's answer:

Determining the truthfulness of a student's entry and distinguishing between hallucinations and accurate statements remains a challenging task.

1. Nature of LLMs:

LLMs do not inherently possess a built-in mechanism to assess the factual correctness of a statement unless they are specifically designed to reference a verified database or factual content.

2. Hallucinations in LLMs:

Hallucination refers to the generation of information that appears plausible but is entirely fabricated or incorrect. LLMs can hallucinate due to their reliance on probabilistic patterns rather than fact-checking mechanisms.

3. Truthfulness Assessment:

To reliably assess the truthfulness of a statement on a scale from 1 to 10, an LLM would need access to a consistent and authoritative source of truth (like a curated knowledge base) against which to compare the generated content or the student's response.

4. One-shot Judgment:

The ability to provide a one-shot judgment (i.e., an accurate evaluation of truthfulness without prior examples or training on similar statements) is limited. A one-shot capability would more likely lead to variability in assessment due to:

Context Sensitivity

Data Limitations

Training Bias

5. Current and Emerging Solutions:

Retrieval-Augmented Generation (RAG).

Fact-Checking Integrations: integration with external fact-checking tools or databases to cross-reference and verify statements. This is an active area of research and development.

Feedback Mechanisms.
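Retrieval-Augmented Generation, mentioned in both answers, first retrieves the textbook sections most similar to the query and then asks the model to answer only from them. A minimal sketch of that retrieval step; the word-count `embed` function is a toy stand-in (an assumption) for a real embedding model, and the prompt wording is illustrative:

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(u, v):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(u[w] * v[w] for w in u)
    norm = math.sqrt(sum(x * x for x in u.values())) * math.sqrt(sum(x * x for x in v.values()))
    return dot / norm if norm else 0.0


def retrieve(query, sections, k=2):
    """Return the k sections most similar to the query."""
    q = embed(query)
    return sorted(sections, key=lambda s: cosine(q, embed(s)), reverse=True)[:k]


def build_prompt(query, sections, k=2):
    """Ask the model to answer only from the retrieved sections."""
    context = "\n\n".join(retrieve(query, sections, k))
    return ("Answer the question using only the textbook sections below. "
            "If they do not contain the answer, say so.\n\n"
            f"Sections:\n{context}\n\nQuestion: {query}")
```

Grounding the answer in retrieved text narrows what the model can hallucinate about but does not remove the problem, which is why the answer above also lists fact-checking integrations.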

I asked:

What is the most appropriate format for a curated knowledge base?

ChatGPT was not able to provide a categorical answer but made general statements on the need to include both structured (databases and spreadsheets) and unstructured data (text), blah blah. I followed up:

Your suggestions are information databases but not knowledge. Is there a place for knowledge bases using for example triplets or similar?

Here is its answer. I edited it for brevity:

You're right—my earlier response focused more on data storage and retrieval mechanisms rather than true knowledge representation. Using structured formats like triplets is highly appropriate. Consider the following steps:

Creating a Knowledge Graph: A knowledge graph is a network of interconnected triplets. Popular platforms are:

RDF (Resource Description Framework): Suitable for storing triplets.

OWL (Web Ontology Language): For creating ontologies.

Graph Databases: Databases like Neo4j.

Populating the Knowledge Base: Curate domain-specific knowledge using subject-predicate-object triplets. This could involve:

Manual Curation: by human experts.

Automated Extraction: extract triplets from unstructured text, such as textbooks or articles, followed by validation.

Integration with LLMs: Can be achieved through:

SPARQL Queries: A query language for RDF to fetch relevant triplets; and

Middleware Layers: translate user queries into semantic queries that can interact with the knowledge graph.

Inference and Reasoning Engines: Integrated over the knowledge base.

Using triplets and knowledge graphs provides a powerful way to represent knowledge in a structured, semantic form that LLMs can use effectively.
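To make the subject-predicate-object idea concrete, here is a minimal in-memory sketch (not RDF, OWL or Neo4j): a list of triplets and a pattern-matching query in which `None` plays the role of a variable, loosely mirroring a basic SPARQL triple pattern. The triplets and the `match` function are hypothetical examples:

```python
from typing import List, Tuple

Triple = Tuple[str, str, str]

# Hypothetical example triplets for a mechanical-design knowledge base
KB: List[Triple] = [
    ("cantilever_beam", "max_bending_moment", "F * L"),
    ("cantilever_beam", "max_stress_location", "fixed_end"),
    ("circular_section", "second_moment_of_area", "pi * d**4 / 64"),
    ("circular_section", "area", "pi * d**2 / 4"),
]


def match(kb, subject=None, predicate=None, obj=None):
    """Return triplets matching the pattern; None acts as a wildcard."""
    return [
        (s, p, o) for (s, p, o) in kb
        if (subject is None or s == subject)
        and (predicate is None or p == predicate)
        and (obj is None or o == obj)
    ]


# Everything the knowledge base states about a circular section
for triple in match(KB, subject="circular_section"):
    print(triple)
```

A real system would use an RDF store or graph database, as the answer suggests; the point here is only that a fact stored as a triplet is looked up exactly rather than regenerated probabilistically.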

I had a similar conversation with the earlier ChatGPT version; this answer is more categorical and more closely aligned with my own expectations. I was pleased to see Llama mentioned in the same company as Google's Bard and Anthropic's Claude.

[2] This may not be apparent to some readers, so I will explain. Assume:

W : World total output = 1.00

X : Total US output = 0.18

a : Average US share price

b : Average share price for the rest of the world

The US stock market accounts for about 70% of the global market capitalization, so