QandA

In this post I present a new tool that can answer questions based on the information in my 33 blog posts un der the general tab

In November 2023, I started this new section, AI STUFF. The AI STUFF posts will alternate with my regular posts. The AI STUFF posts will be in English and Turkish. They are written in English and translated to Turkish. Both will be on the same page.

Kasım 2023 den itibaren yeni bir bölüme başlıyorum: AI STUFF. AI STUFF gönderileri normal gönderilerimle dönüşümlü olarak yayınlanacak ve ayni sayfada İngilizce ve Türkçe olacak.

In rhe last post, I persented a Jupyter Notebook that did the following:

Create an index for all my posts under the GENERAL tag

Create embeddings for these posts using Open AI Portal.

Enter a query and retrieve the top ten matching entries from my blog posts

Geçen hafta şu aşağıdakileri yapan bir Jupyter Notebook oluşturdum:

GENERAL etiketi altındaki tüm gönderilerim (yukarıdaki liste) için bir dizin yarat

Open AI Portal'ı kullanarak bu gönderiler için embeddings vektörleri hesapla.

Blog yazılarımdan sorulan soruyla eşleşen ilk on girişi gör bul

In this post, I present a new jupyter notebook, `qa.ipynb`, that answers questions based on the information in my 33 blog posts under the GENERAL tab. It is not a chatbot beause it does not seem to remember the previous questions. You ask a question, it gives an answer. The next question is treated as a new question. I am usingOpenAI Assistants API to do this. Before I explain how the notebook works, let me give the results. Below are the copies of some questions I asked and the answers I received.

Bu yazıda GENEL sekmesi altında yer alan 33 blog sayfamla ilgili soruları yanıtlayan yeni bir jupyter notebook, `qa.ipynb'yi sunuyorum. Bu bir chatbot değil çünkü önceki soruları hatırlamıyor gibi. Her soru yeni bir soru olarak ele alınıyor. OpenAI Assistants API'sini kullanıyorum. Programın nasıl çalıştığını anlatmadan önce sonuçları vereyim. Aşağıda sorduğum bazı soruların ve aldığım cevapların kopyalarını bulabilirsiniz.

Results

The following is a record of my Q&A session. As you can see, the performance is pretty good. The only question it could not answer was the time of the day for the Turkish earthquake. Maybe my phrasing was wrong. I cannot maintain a chat yet but even now it is good.

Query : Why did Duke Energy convert a gas turbine to hydrogen?

ANSWER : Duke Energy is converting a gas turbine to hydrogen as part of a demonstration to use a hydrogen-burning turbine for energy storage. The concept involves storing excess electricity as hydrogen and then using the hydrogen-burning turbine to convert it back to electricity when needed. This demo, planned at Duke Energy’s DeBary power plant in Florida, is a collaborative effort with GE Vernova and the engineering firm Sargent&Lundy. Despite the simplicity of the concept, there are technical difficulties, and given the low round trip efficiency — calculated to be about 24% — it presents challenges in making this a commercially viable proposition【13†source】.

Query : What time of the day was the Turkish Earthquake?

ANSWER : I don't know.

Query : Who is Oliver Anthony?

ANSWER : Oliver Anthony is a previously unknown young man who gained internet fame seemingly overnight by playing his guitar and singing a song that became a phenomenon. His video was uploaded and quickly amassed 26 million views, 1.1 million likes, and 110 thousand comments as of a Sunday morning, August 20th【11†source】.

Query : Does eVinci microreactor need cooling water?

ANSWER : The eVinci microreactor does not need cooling water, as it is stated that "there is no need for cooling water" since the reactor is hermetically sealed and the heat is transferred externally by heat pipes【11†source】.

Query : Was there an earthquake in Japan in 2011?

ANSWER : Yes, there was an earthquake in Japan in 2011. It was the Tohoku earthquake with a magnitude of 8.9【11†source】.

Query : Is it expensive to build earthquake resistant buildings?

ANSWER : Building earthquake-resistant buildings does indeed incur additional costs. According to a statement by the Japanese construction firm Nice Corporation, a seven-story building with basic earthquake containment is estimated to cost about 13 to 15 percent more than a standard building without such containment features【3†source】.

Query : Was there an earthquake in Turkey in 2023?

ANSWER : Yes, there was an earthquake in Turkey in 2023. An expert civil engineer discusses the Earthquake Disaster that took place in the country in a document【13†source】.

Query : Why did so many people die in the Turkish earthquake?

ANSWER : Many people died in the Turkish earthquake because there were too many buildings in Turkey that did not comply with the general construction procedure, and even fewer that met the earthquake code requirements【13†source】. This implies that when the earthquake struck, those non-compliant buildings were more susceptible to damage and collapse, which can lead to higher casualties among the occupants.

Query : Is there going to be a war between the US and China?

ANSWER : According to the provided text, the likelihood of a war between the US and China is receding due to various reasons, including the convergence of their economic power and resultant convergences in their social and political systems in operation, though not necessarily in appearance. Additionally, the text implies that the actions of Putin may be a significant factor in preventing such a conflict【11†source】.

Explain the Notebook

To follow this section, you need a copy of the notebook file. Download is from github as explained at the end. I made it work on my blog pages but you can try it on any list of web pages that you want to use as an information repository. All you need is to create a text file with the URLs and the titles.

The following refers to lines and functions in qa.ipynb. which you can download from github as explained in the last section.

load_dotenv() Read my OpenAI key from the file .env. You will not find this hub on github. You have to create it with your own OpenAI key.

This is the line in the .env file:

OPENAI_API_KEY="sk-********"The string should include your OpenAI access key.

(Client, LLM)=init_openai()The init_ai() is in he file ute.py. It talks to OpenAI portal. Client is me. The files I upload do not have to re-loaded because Client is always the same for me, it is me.

Save the text files locally and upload to OpenAI

read_upload_list(UploadListFile)Read the URLs and the page titles already uploaded. No need to do it again. Note that the files are uploaded to your repository as the Client. Once thay are in there, you can assign them to Assistants and threads.

read_upload_id(UploadIDFile)This function gets the OpenAI internal IDs of what was uploaded in previous sessions.

TextList=read_page_list(TextListFile)read_page_list is in ute.py. It reads the URLs and the page titles from the text list file . The Text List File is data/general/general_textlist.txt. For me, it has my 33 post page addresses. You can put there other pages of your choice.

Uploading Files

for (itext,pair) in enumerate(TextList):

(url,title)=pair

txtfile=get_file_name(url)+".txt"

if txtfile in UploadList:

print("Skipping %s because it has already been uploaded"%txtfile)

continue

# Rad the text from the URL address and save into the text file

rdweb(url, datafolder+txtfile)

print("Uploading %s"%txtfile, end="")

File = Client.files.create(

file=open(datafolder+txtfile, "rb"),

purpose='assistants'Read Assistant ID from file or create new

I do not want to increase the number of assistants assigned to my account. I the costs are calculated per assistant. Therefore, I use the same assistant. When I create it, I save its ID on the disk and retrieve that ID and use it in later runs.

Generate Embeddings

At the time I am writing this, I have 33 blog posts under the 'general' tab on my Substack page. Each blog post is in a separate text file. This makes it 33 text files. The OpenAI does not allow to attach more than 20 files. Since I cannot attach all 33, I have to find the files that are most relevant to the question and attach only those files. These files can be selected in a number of ways. Before we consider different options, we need to generate embeddings. In the following cell, I do the following:

Load embeddings from the `npz` file if it exists

If there is no `npz` file, embed all files and store with metadata

Check if there are new text files in the TextList

Embed the new text files and append them to the end of the embeddings

Metadata

Metadata is what I store with the embeddings. It defines what pages they come from, etc.

The remaining tasks are pretty straightforward and well explained in the notebook. They are:

Define the prompt string that is sent to OpenAI

Logging our session into a file

Define the query string (I kept all the past queries. The last one counts of course)

Select the files closest to the query (as we did in the last post)

Create a thread and attach the closest 3 files to the thread. Note that all 33 files have already been posted. We use the OpenAI file IDs to assign the selected three to this thread.

Run this thread. I print the status of the run on the screen.

The response is put into

Client.beta.threads.messages

I tried to keep it clear and simple. If you have any queries, you can ask through the Twitter Community Group (see the next section for what this is).

Download the files and run them on your computer

I am using Python and the OpenAI API. In addition, I created an X (formerly known as Twitter) Community Group 'Building AI Tutors'. If you are interested, please join the group. I will be posting my progress there as well.

You do not need to be an experienced Python programmer but some knowledge of Python will be helpful. Below I list the steps you need to take to join me and explain how to go about it:

Install VS Code from https://code.visualstudio.com/download. This is a free code editor and development environment. It is the tool I am using therefore should be able to help you if you have problems.

Install Python from https://www.python.org/downloads/. At the time I started this notebook,

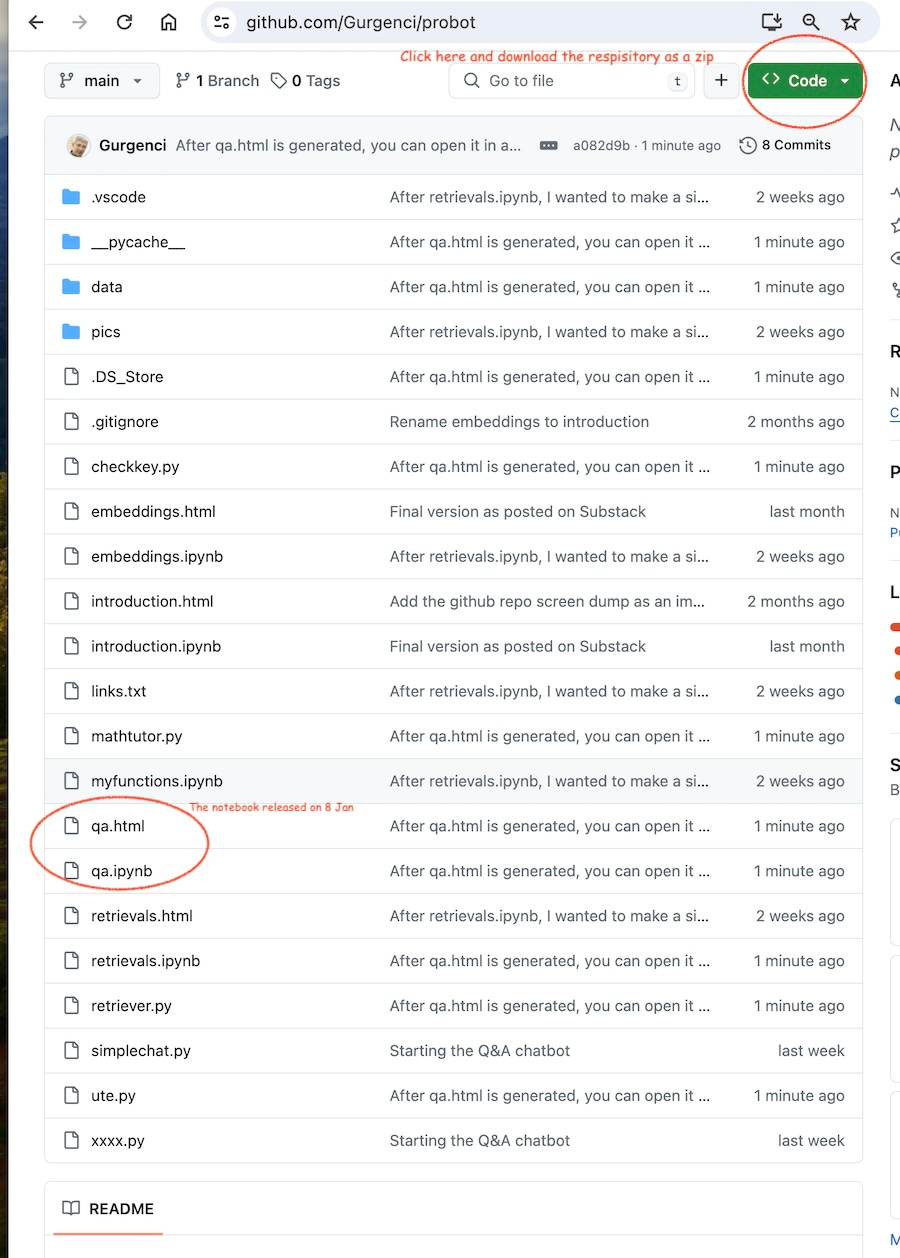

Download my

probotfiles from my account on github. I do not think you need to have a github account to do this. The name of the project is probot. You can download the wholeprobotfolder as a zip pack and unzip to your local disk.

Python ve OpenAI API kullanıyorum. Ek olarak, bir X (eski adıyla Twitter) Topluluk Grubu 'Yapı Yapay Zeka Eğitmenleri' oluşturdum. Eğer ilgileniyorsanız lütfen gruba katılın. Gelişmelerimi orada da paylaşacağım.

Deneyimli bir Python programcısı olmanıza gerek yok ancak biraz Python bilgisi faydalı olacaktır. Aşağıda bana katılmak için atmanız gereken adımları listeliyorum ve bunu nasıl yapacağınızı açıklıyorum:

VS Code'u https://code.visualstudio.com/download adresinden yükleyin. Bu ücretsiz bir program ve benim kullandığım araçtır, dolayısıyla sorun yaşamanız durumunda size yardımcı olabilirim.

Python'u https://www.python.org/downloads/ adresinden yükleyin.

Tüm program ve detaylı anlatımları github'daki hesabımdan indirin. Bunu yapmak için bir github hesabınızın olması gerektiğini sanmıyorum. Projenin adı probot. Probot klasörünün tamamını zip paketi olarak indirebilir ve zip dosyasını yerel diskinize açabilirsiniz